Where Little Computer People Dwell...

Last updated on: 21 May, 2022

…There’s Always A Mess We Have to Clean

I treat my computers like little homes. If I forget about regular spring cleanings, they turn into veritable garbage cans, with unnecessary stuff piling up everywhere I turn my head. But while I can easily bring my apartment back to its former tidy state in just a few hours, cleaning up digital storage space is neither quick nor easy. And the truth is, the less often it’s done, the harder and more time-consuming it becomes.

Believe it or not, digital junk may hurt not only our work efficiency but, to some degree, also our physical and mental wellness. Successfully clearing it out, on the other hand, can boost our productivity and psychological comfort. I do my spring cleaning at least once a year, usually around late winter or early spring, and sometimes twice a year. That’s when I find myself spending more and more time looking for some document or image that I urgently need but whose name I don’t remember, juggling files between hard disks to find enough space to squeeze in that 200 GB of simulation cache, or noticing more and more duplicates of various files. And time spent at the computer on unnecessary tasks means more frustration, and less time for work, physical activity, or family and friends.

I stopped using Windows a long time ago, so in describing digital spring cleaning I’ll concentrate exclusively on the GNU/Linux operating system. Of course, this doesn’t mean that Windows users won’t find anything helpful in this post. Most subjects discussed here, as well as the software I use for cleanup, are more or less platform-agnostic.

Types of Junk

Digital junk can take many forms. I personally divide it into eight main categories: duplicates, similar files, old configuration files, cache, temporary files that were never deleted, ancient logs, core dumps and, finally, miscellaneous files. I sorted them by their contribution to the overall mess and, at the same time, by a rough, subjective estimate of the cost of removing them, beginning with the most difficult and costly cleanup task.

File Duplicates

In order to mark a file as a duplicate of another file located in the system, a computer program must analyze its full content and compare it to the content of the original file. This is almost always done by comparing the hashes of both (or more) files, which means that those files must be read from start to end.

A hash is a fixed-length value (usually displayed as a string of hexadecimal characters) calculated by a mathematical function from the input data, and it’s supposed to be practically unique to that data. The same input always produces the same hash, so two identical data streams (i.e. two identical files) return identical hashes. Many hashing algorithms exist, some more robust than others. No algorithm can guarantee that two different files will never share a hash, because there are far more possible files than possible hashes, so it’s important to select one for which the chances of this are close to zero. CRC-32 and MD5 don’t meet this bar: MD5 collisions can be produced deliberately, and CRC-32 is so short that collisions occur by chance in large file collections. When an algorithm returns an identical hash for two completely different input data streams, it’s called a hash collision, and in the context of duplicate removal it might have disastrous consequences. Therefore, always pick an algorithm that is resistant to collisions.

The second factor when choosing a hash algorithm is its speed. The faster it is, the less time you’ll wait for the program to find duplicates and the less power your computer will use, which matters all the more at a time when energy prices keep climbing. Some algorithms take advantage of parallelism, some don’t. I like to use Blake3, as it’s resistant to collisions and uses multithreading.
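To see the principle in action, here’s a minimal sketch with two hypothetical helper functions built on sha256sum from GNU coreutils. SHA-256 is collision-resistant but single-threaded; if you have b3sum (the BLAKE3 command-line tool) installed, it can likely be substituted, since it prints its output in the same “digest, then name” format.

```shell
# Print only the hex digest of a file.
# Reading from stdin makes sha256sum print "digest  -", so odd file names
# can't leak into the first field.
digest() {
    sha256sum < "$1" | cut -d ' ' -f 1
}

# Succeed (exit status 0) when two files have identical digests,
# i.e. when they are duplicates for all practical purposes.
same_content() {
    [ "$(digest "$1")" = "$(digest "$2")" ]
}
```

For example, `same_content a.txt b.txt && echo duplicate` prints “duplicate” only if both files hash to the same value; change a single byte in one of them and the digests no longer match.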

Similar Files

These are files whose content is perceptually identical to a human, but which don’t produce identical hashes because they’ve been transcoded to a different format or modified in some way. Here are some examples:

- Copy of an image, video or audio file that was transcoded to a different format with or without prior modification.

- Modification of an image, video or audio file saved to the same format.

- A project or document file saved with only a minor change which does not justify treating the file as being significantly different in relation to contents of the original file.

Hashing algorithms see those files as completely different from their originals, so they’re useless in this context. There are, however, algorithms that can determine the level of similarity between such files based on some distinguishing features, and each media type has its own set of algorithms for that purpose. For images, for example, you can use the scale-invariant feature transform (SIFT) to detect features that can later be compared. For comparing video clips, peak signal-to-noise ratio (PSNR) or structural similarity (SSIM) may come in handy, and I believe both are available in the OpenCV library. I’m not sure which algorithms are optimal for determining the similarity of audio files, though; most tools that compare audio files for similarity seem to rely on tag matching, which is not a true comparison of file contents, only of their metadata.
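For reference, PSNR is simple enough to write down. Given two images (or video frames) $I$ and $K$ of $m \times n$ pixels, and $\mathrm{MAX}_I$ the largest possible pixel value (255 for 8-bit channels), it’s defined through the mean squared error:

```latex
\mathrm{MSE} = \frac{1}{mn}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1}\bigl[I(i,j) - K(i,j)\bigr]^2,
\qquad
\mathrm{PSNR} = 10\log_{10}\!\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right)\ \text{dB}
```

Identical frames give an MSE of zero (an infinite PSNR), and the higher the value, the closer the frames are. As a worked example, two 8-bit frames with an MSE of 6.5025 give $10\log_{10}(65025 / 6.5025) = 10\log_{10}(10000) = 40$ dB.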

When it comes to comparing project files, like Houdini’s .hip files, Blender’s .blend scenes or geometry written in a binary format, there’s literally no good way of doing it except opening the file and browsing its contents in a dedicated program.

With ASCII formats it’s possible to run a diff, but I strongly discourage it: some formats (like Wavefront .obj) allow a certain degree of freedom in how they describe data, so two files that look different to diff might give identical results when opened in dedicated software.

It’s always better to just open such files and see what they contain with your own eyes.

Old Configuration Files

This one’s a breeze on *NIX systems, not so easy on Windows.

On GNU/Linux, programs usually follow the XDG Base Directory specification and put their configuration files (dotfiles) in $XDG_CONFIG_HOME, which defaults to $HOME/.config, so deleting config residue is just a matter of removing the directory named after the program you uninstalled.

Some programs stick to the traditional location of dotfiles, directly in $HOME, so you may also need to look there.

If you uninstalled the program with apt remove rather than apt purge, its system-wide config is left behind in /etc/, so you may need to remove it from that location as well.
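A quick way to find a program’s leftovers is to check the usual candidate locations. Below is a minimal sketch with a hypothetical function name; the list of locations (the XDG config directory, a dot-directory or “rc” dotfile in $HOME, and /etc) only covers common conventions, so some programs will slip through.

```shell
# Print every existing config location that matches a program name.
# Usage: config_residue NAME    (e.g. config_residue blender)
# Nothing is deleted; review the output and remove things by hand.
config_residue() {
    name=$1
    # XDG default: $HOME/.config when XDG_CONFIG_HOME is unset or empty
    config_home=${XDG_CONFIG_HOME:-$HOME/.config}
    for candidate in \
        "$config_home/$name" \
        "$HOME/.$name" \
        "$HOME/.${name}rc" \
        "/etc/$name"
    do
        # -e matches files and directories alike
        [ -e "$candidate" ] && printf '%s\n' "$candidate"
    done
    return 0
}
```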

Flatpaks, on the other hand, store their dotfiles in $HOME/.var/app/program_path/config, and flatpak can purge them together with the program (flatpak uninstall does so when given the --delete-data option).

This is good and bad at the same time: if you want to preserve those dotfiles, you need to remember to back them up before running flatpak uninstall.
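Backing up a Flatpak’s settings is a one-liner with tar. Here’s a minimal sketch wrapped in a hypothetical function; the app ID used below (org.example.App) is only a placeholder.

```shell
# Archive a Flatpak app's per-user config before uninstalling it.
# Usage: backup_flatpak_config APP_ID [DEST_DIR]   (DEST_DIR defaults to $HOME)
backup_flatpak_config() {
    app_id=$1
    dest=${2:-$HOME}
    src="$HOME/.var/app/$app_id"
    if [ ! -d "$src/config" ]; then
        printf 'no config directory for %s\n' "$app_id" >&2
        return 1
    fi
    # -C keeps paths inside the archive relative to ~/.var/app
    tar -czf "$dest/$app_id-config.tar.gz" -C "$HOME/.var/app" "$app_id/config"
}
```

Usage would look like `backup_flatpak_config org.example.App && flatpak uninstall org.example.App`, and restoring is `tar -xzf ~/org.example.App-config.tar.gz -C ~/.var/app`.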

The worst offenders are usually programs that were ported from Windows.

It’s quite common for those guys to insist on putting their config files in the weirdest locations, like $HOME/Documents or even worse — $HOME/My Documents, but this “tendency” makes them easy to spot.

Cache

By cache I mostly mean simulation or geometry cache created by Houdini or other DCC packages. Where you store it depends on your pipeline, but it’s a good idea to be consistent about where you put it, so it can be easily found and removed when it’s no longer needed. I blast it to kingdom come just before archiving a completed project. Keep in mind, though, that random number generators on different CPU architectures (amd64 and M1, for example) may produce different numbers for the same seed. Therefore, cache might be worth keeping if the project is to be opened later on a computer with a different architecture. Otherwise, the simulation might not look the same after it’s regenerated on such a machine.

The other type of cache is the system cache, which for XDG-compliant applications is located in $XDG_CACHE_HOME ($HOME/.cache by default).

These are user-specific, non-essential files that programs create and use at run time.

They can be removed freely, but a program will probably recreate some of them next time it runs.

I usually leave them alone and only remove those that are a residue of packages I already uninstalled.
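To see which applications’ caches have grown the largest (including leftovers from uninstalled ones), du and sort are enough. A minimal sketch with a hypothetical function name; it reports sizes in KiB and, because it relies on a plain `*` glob, skips hidden entries.

```shell
# List entries in the user cache directory, largest first.
# Usage: cache_usage [COUNT]   (default: top 10)
cache_usage() {
    count=${1:-10}
    cache_home=${XDG_CACHE_HOME:-$HOME/.cache}
    # du -sk: one summary line per entry, size in KiB
    # sort -rn: numeric order, largest first
    du -sk -- "$cache_home"/* 2>/dev/null | sort -rn | head -n "$count"
}
```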

Temporary Files

System’s temporary files on GNU/Linux are a non-issue.

They are put in /tmp, which most distributions clear on every reboot.

For this reason they don’t quite fall into the “junk” category.

If you’re using a separate /tmp partition, you can completely ignore temporary files.

If on the other hand your /tmp is on the root partition, you’re very tight on free space, and you rarely reboot your system, you might consider cleaning it up from time to time.

However, do it only as a last resort and only if you know what you’re doing, as it’s quite dangerous.

Some of those files may still be in use by programs that are currently running, and removing them might cause those programs to malfunction, with consequences ranging from minor glitches to serious trouble.

So, if you’re on GNU/Linux, better leave those files alone.

If your distribution doesn’t clean /tmp on boot by default, you can configure it to do so.

Some programs developed mostly for Windows might put their temporary files in all sorts of weird locations in user-accessible space. This may also apply to their GNU/Linux ports, so keep an eye on those. Or, even better, don’t use them at all and stick to native GNU/Linux applications.

More problematic are user-generated temporary files.

These are files that you might have once created manually and “just for a moment”, but then forgot to remove.

After a day or two you might still remember that a file was a temporary one, but after a month or half a year, and especially once such files have multiplied, probably not.

Therefore, it’s very important to either save them in a location that you know contains only temporary files, name them accordingly (by adding a prefix or suffix, for example), or simply keep strict discipline and force yourself to always remove such files when they’re no longer needed.

I usually save them straight to /tmp, so in case I forget to pulverize them, my system will do it for me on the next boot.
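When you create temporaries from the terminal, mktemp helps: it creates a uniquely named file (or, with -d, a directory) and prints its path, so nothing gets overwritten by accident. A minimal sketch with two hypothetical helpers; they honor $TMPDIR if it’s set, fall back to /tmp otherwise, and use a “scratch.” prefix so that leftovers are easy to recognize.

```shell
# Create a scratch file in /tmp (or $TMPDIR) and print its path.
# Usage: f=$(scratch) && sort big_list.txt > "$f"
scratch() {
    mktemp "${TMPDIR:-/tmp}/scratch.XXXXXX"
}

# Same idea for a whole directory of temporary work.
scratch_dir() {
    mktemp -d "${TMPDIR:-/tmp}/scratch.XXXXXX"
}
```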

I must say that it’s very helpful to add /tmp to the list of Places so that it can be easily accessed from file choosers.
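In GTK-based file choosers, Places bookmarks are kept in a plain text file with one URI per line, at $XDG_CONFIG_HOME/gtk-3.0/bookmarks. A minimal sketch of adding /tmp there with a hypothetical helper; it assumes a GTK desktop (KDE applications store their Places elsewhere) and a path without spaces, since those would need URI escaping.

```shell
# Add a directory to the GTK file chooser's Places (bookmarks) list.
# Usage: add_place /tmp
add_place() {
    bookmarks="${XDG_CONFIG_HOME:-$HOME/.config}/gtk-3.0/bookmarks"
    uri="file://$1"
    mkdir -p "$(dirname "$bookmarks")"
    # -x: whole-line match, -F: literal string; append only if missing
    grep -qxF "$uri" "$bookmarks" 2>/dev/null || printf '%s\n' "$uri" >> "$bookmarks"
}
```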

According to my observations, the typical location where all manually created “temporaries” are stored en masse, only to be forgotten two seconds later, is of course our favorite and famous desktop.

This is especially true for non-technical people, who are capable of creating impressive quantities of files in that location, while completely ignoring other parts of $HOME, or perhaps rather %USERPROFILE%, as it’s more characteristic for Windows users.

I think it’s counterproductive, or at the very least it doesn’t help with anything we’re trying to do on our computers.

I constantly try to convince my family members not to do that, but I always fail miserably.

Force of habit always wins.

Oh yes, that’s what we like.

(Edited version of a screenshot originally created by aroth999, CC BY-SA 3.0)

Ancient Logs

All system logs are stored in /var/log and are rotated regularly by the operating system.

Rotation means that after a log file reaches a certain size (or age), it is renamed and compressed, and a new empty file is created in its place.
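The mechanism itself is simple. The hypothetical function below is only a simplified illustration of the idea (size-based, one generation kept), not how logrotate is actually implemented; on a real system, leave rotation to logrotate and systemd-journald.

```shell
# Rotate FILE once it reaches MAX_BYTES: rename it, compress the old copy,
# and start over with an empty file. An older archive gets overwritten.
# Usage: rotate_if_large app.log 1048576
rotate_if_large() {
    file=$1
    max=$2
    [ -f "$file" ] || return 0
    size=$(wc -c < "$file")
    if [ "$size" -ge "$max" ]; then
        mv -- "$file" "$file.1"   # rename
        gzip -f "$file.1"         # compress to $file.1.gz
        : > "$file"               # recreate an empty log
    fi
}
```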

Usually removing old logs doesn’t make much sense, because they take up little storage space.

However, if some system issue is going on, they can easily balloon to ridiculous sizes, but in that case removing them won’t help much until you fix the cause of the problem.

When disk space is running critically low on /var partition, or root partition if you didn’t create a separate partition for /var, you may want to look into /var/log/journal, which contains logs written by systemd.

Of all log files, these can grow the largest, well past several gigabytes.

For example, my current journal logs take around 1.5 GB, mostly because they’re littered with thousands of “Got data flow before segment event” entries made by GStreamer (which I believe is some kind of bug in the program), and with multiple systemd-coredump entries of segfaulting Houdini (it likes to play tricks on me from time to time).

You should not directly delete journal logs, but use appropriate journalctl commands instead.

For example, the journalctl --disk-usage command will tell you how much disk space is used by systemd’s logs.

With this information, you can decide whether it’s worth keeping the logs or pruning at least part of them.

I recommend keeping as many log entries as possible, because you never know when they might come in handy, but if disk space is critically low and you have exhausted all other means of freeing it, by all means go ahead.

I’m not going to explain in detail how to clean up these logs; instead, I’ll point you to the ArchWiki.

Reading the journalctl man page might also explain a lot.
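Besides one-off pruning with journalctl --vacuum-size= or --vacuum-time=, the journal can be capped permanently with the SystemMaxUse= setting in a journald drop-in file. A minimal sketch that writes such a drop-in; the function name, the file name and the 1G value are only examples, and the target directory is a parameter so you can try it outside /etc first. Writing to /etc needs a root shell, and the new limit takes effect after `systemctl restart systemd-journald`.

```shell
# Write a journald drop-in that limits the persistent journal's size.
# Usage: journald_cap 1G [DIR]   (DIR defaults to /etc/systemd/journald.conf.d)
journald_cap() {
    limit=$1
    dir=${2:-/etc/systemd/journald.conf.d}
    mkdir -p "$dir" || return 1
    printf '[Journal]\nSystemMaxUse=%s\n' "$limit" > "$dir/size-limit.conf"
}
```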

Core Dumps

Core dumps get written whenever a program crashes, or is terminated abnormally.

They are a snapshot of a program’s memory, usually taken at the moment it crashed, and they can be used for debugging.

On GNU/Linux with systemd, their default location is /var/lib/systemd/coredump, where systemd keeps them for a few days (3 by default, though this depends on systemd’s configuration).

If you think you don’t need them anymore, simply clean them up by deleting their files (requires root permissions).

You can also disable them completely if they’re of no use to you.
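Both steps can be sketched with standard tools (coredumpctl list also shows what’s stored). The function names and the drop-in file name below are hypothetical; the settings come from coredump.conf, where, as I understand it, Storage=none stops systemd-coredump from keeping dumps on disk and ProcessSizeMax=0 disables processing them altogether. As with any file in /etc, writing the drop-in needs root.

```shell
# Show how much space stored core dumps take (reading may need root).
coredump_usage() {
    du -sh /var/lib/systemd/coredump 2>/dev/null
}

# Write a systemd-coredump drop-in that stops core dumps from being stored.
# Usage: disable_coredumps [DIR]   (DIR defaults to /etc/systemd/coredump.conf.d)
disable_coredumps() {
    dir=${1:-/etc/systemd/coredump.conf.d}
    mkdir -p "$dir" || return 1
    printf '[Coredump]\nStorage=none\nProcessSizeMax=0\n' > "$dir/disable.conf"
}
```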

Miscellaneous

This category contains minor types of junk, like broken symbolic links, empty files and empty directories.

Symbolic links get broken when the target file or directory no longer exists. At this point they can either be edited to point at the new file or removed completely.

With empty files you have to be cautious, because they might have been created by a running daemon (background process) or an application, and removing them may cause it to malfunction.

This is especially true with file locks (.lock).

Avoid deleting those, unless you know what you are doing and you are sure that it will not cause any harm to your current session.

As far as I’m aware, deleting empty directories isn’t as problematic; they can be removed without any repercussions. Correct me if I’m wrong, but I haven’t seen a program that would malfunction due to a missing empty directory, except maybe a few DOS games from the late nineties.

The Easy Part

Okay, so that’s all fine and dandy, but this information isn’t very helpful on its own. Hashes, SSIMs and other weird stuff don’t tell you much about how to actually do our little spring cleaning, do they? Fortunately, wise people have done the work for us in the form of applications that take most of the cleaning work, especially its hardest parts, off our hands. But we’ll get into that in just a minute.

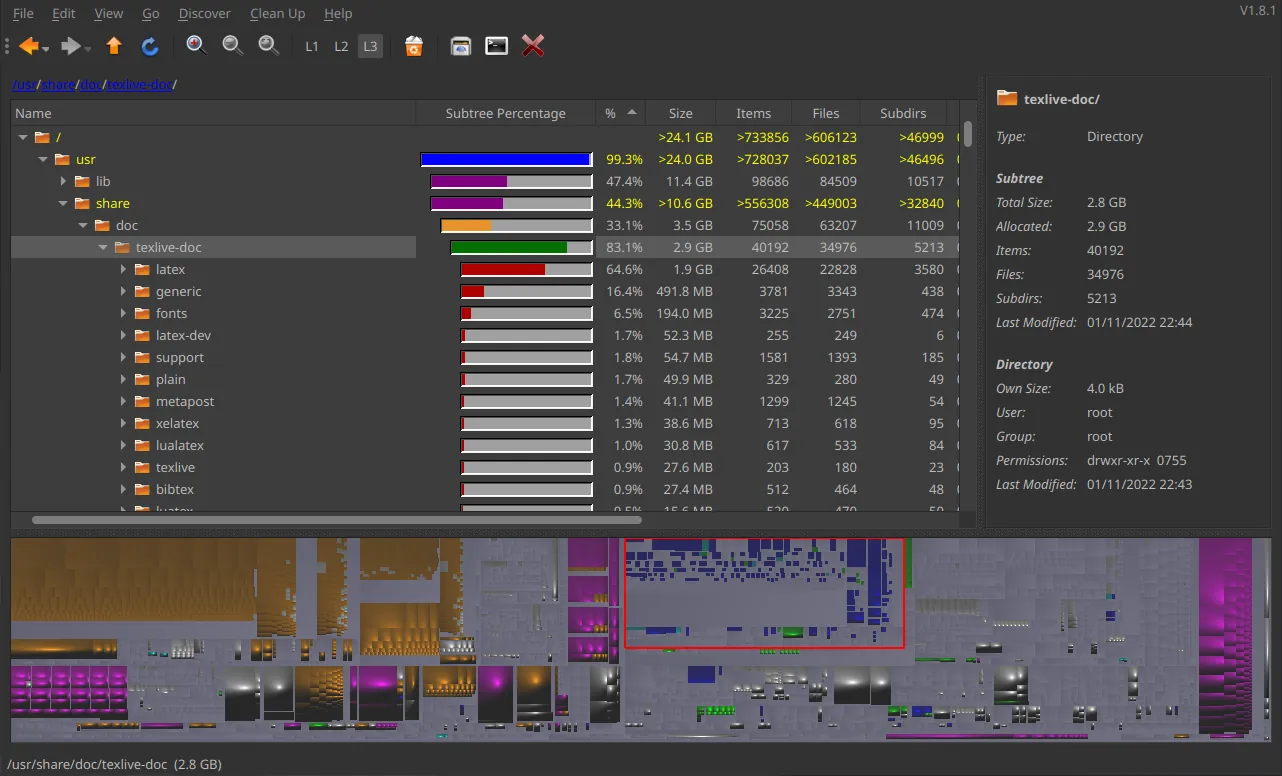

When I’m doing spring-cleaning, the first step I always do is to fire up QDirStat1 and scan the file system for largest files. The program presents you with a nice interactive graphical overview on size of all files residing on the scanned path. I inspect them all, starting from the largest, and decide if it’s worth keeping or can be removed. Usually the first files that go are those from my downloads directory, as this is the most likely the location of files that are either outdated or huge, and all of them usually can be obtained from the Internet at anytime. I immediately follow it with videos that I downloaded with yt-dlp. That’s an instant reclamation of at least several gigabytes.

QDirStat’s main interface.
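If you’d rather stay in the terminal, GNU find and sort give a rough equivalent of a “largest files” view. A minimal sketch with a hypothetical function name; sizes are printed in bytes, and it relies on GNU find’s -printf.

```shell
# Print the N largest regular files under DIR, biggest first.
# Usage: largest_files DIR [N]   (N defaults to 20)
largest_files() {
    # %s = size in bytes, %p = path (GNU find)
    find "$1" -type f -printf '%s\t%p\n' 2>/dev/null | sort -rn | head -n "${2:-20}"
}
```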

Then I start clearing project paths of all sorts of junk, like Houdini’s backup .hiplc and .hdalc files (by default, Houdini saves a backup whenever you save a scene or an HDA), leaving only one backup per day for each scene.

Next are Blender’s /.*\.blend[\d]+/gm (regex) backups.

Lastly, old incremental saves created by other programs in my pipeline, but as with .hip backups, I always keep at least one file from each (arbitrarily chosen) period.
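Both chores can be scripted. Below is a minimal sketch with two hypothetical helpers built on GNU find. The first lists Blender’s numbered backups (.blend1, .blend2, …) using the regex above rewritten in POSIX ERE form. The second lists every file matching a pattern except the newest one from each calendar day, mirroring the one-backup-per-day rule. Both only print candidates; nothing is deleted until you feed the reviewed list to rm. The day is taken from each file’s modification time, which assumes the backups haven’t been copied around without preserving timestamps.

```shell
# List Blender's numbered backup files (scene.blend1, scene.blend2, ...).
# Usage: blend_backups DIR
blend_backups() {
    # -regex matches the whole path, hence the leading .*
    find "$1" -regextype posix-extended -type f -regex '.*\.blend[0-9]+'
}

# List files matching PATTERN that are NOT the newest of their calendar day.
# Usage: stale_backups DIR PATTERN   e.g. stale_backups ./backup '*.hiplc'
stale_backups() {
    tab=$(printf '\t')
    # day <TAB> mtime in epoch seconds <TAB> path
    find "$1" -type f -name "$2" -printf '%TY-%Tm-%Td\t%T@\t%p\n' |
        # group by day, newest first within each day
        sort -t "$tab" -k1,1 -k2,2nr |
        # skip the first (newest) entry per day, print the rest
        awk -F '\t' 'seen[$1]++ { print $3 }'
}
```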

If I know that some versions of simulation cache are outdated, and will not be used anymore, I purge them as well. They always take a whopping chunk of disk space.

After that, I review my projects path in order to find projects that are already completed and can be archived, compressed, moved to external backup drives or other media, or can be simply deleted.

If you’re archiving a project indefinitely, it’s important to follow symbolic links (symlinks) while doing it.

Otherwise, the project will not be stand-alone and will break when moved to another machine due to broken symlinks.

Tar’s --dereference (-h) option is helpful in this regard.
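For example, with GNU tar, wrapped in a hypothetical helper (the project and archive names are placeholders). Without --dereference, tar stores the symlink itself, which would dangle once the archive is unpacked on another machine.

```shell
# Archive a project as a stand-alone unit: --dereference (-h) stores the
# files that symlinks point to instead of the links themselves.
# Usage: archive_project PROJECT_DIR ARCHIVE.tar.gz
archive_project() {
    tar --dereference -czf "$2" -C "$(dirname "$1")" "$(basename "$1")"
}
```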

The Real Deal

Now on to the meat and potatoes of spring cleaning: nasty duplicates and similar files. As I mentioned earlier, several programs exist that can take the bulk of the work off your hands. But depending on your skills and how disciplined you are about keeping your file system tidy, you’ll still spend anywhere from several hours to several days cleaning it up. Yup, you heard that right.

First and most importantly, use Free Software only. Period. Never ever use proprietary closed-source software to scan your hard drives, especially if you’re working under a non-disclosure agreement (NDA). You can never be sure what such black-boxed programs do under the hood, and it doesn’t matter whether they’re “freeware”, “freemium” or paid software: if they’re closed-source and non-free, they’re potentially malicious. With free (as in “freedom”) software, the source code is open to the public and can be audited anytime and by anyone, including you and me. If possible, prefer binaries from your distribution’s repository over other sources, such as manual compilation from upstream, AppImages or Flatpaks. Finally, never run duplicate finders as root.

Over the years I’ve used three FOSS (Free and Open Source Software) duplicate finders. When I was still on Windows, I used DupeGuru, but it was so long ago that I really can’t say much about the program anymore, except that, if I recall correctly, it worked pretty well. It compares file hashes and can look up similar images.

After moving to GNU/Linux, I stumbled upon FSLint and used it for a couple of years, until it disappeared from Debian’s repository at the end of 2019, probably because upstream stopped maintaining the software. It was a good program, very easy to use, but it lacked the ability to compare similar media files (not that I use this feature often, or ever).

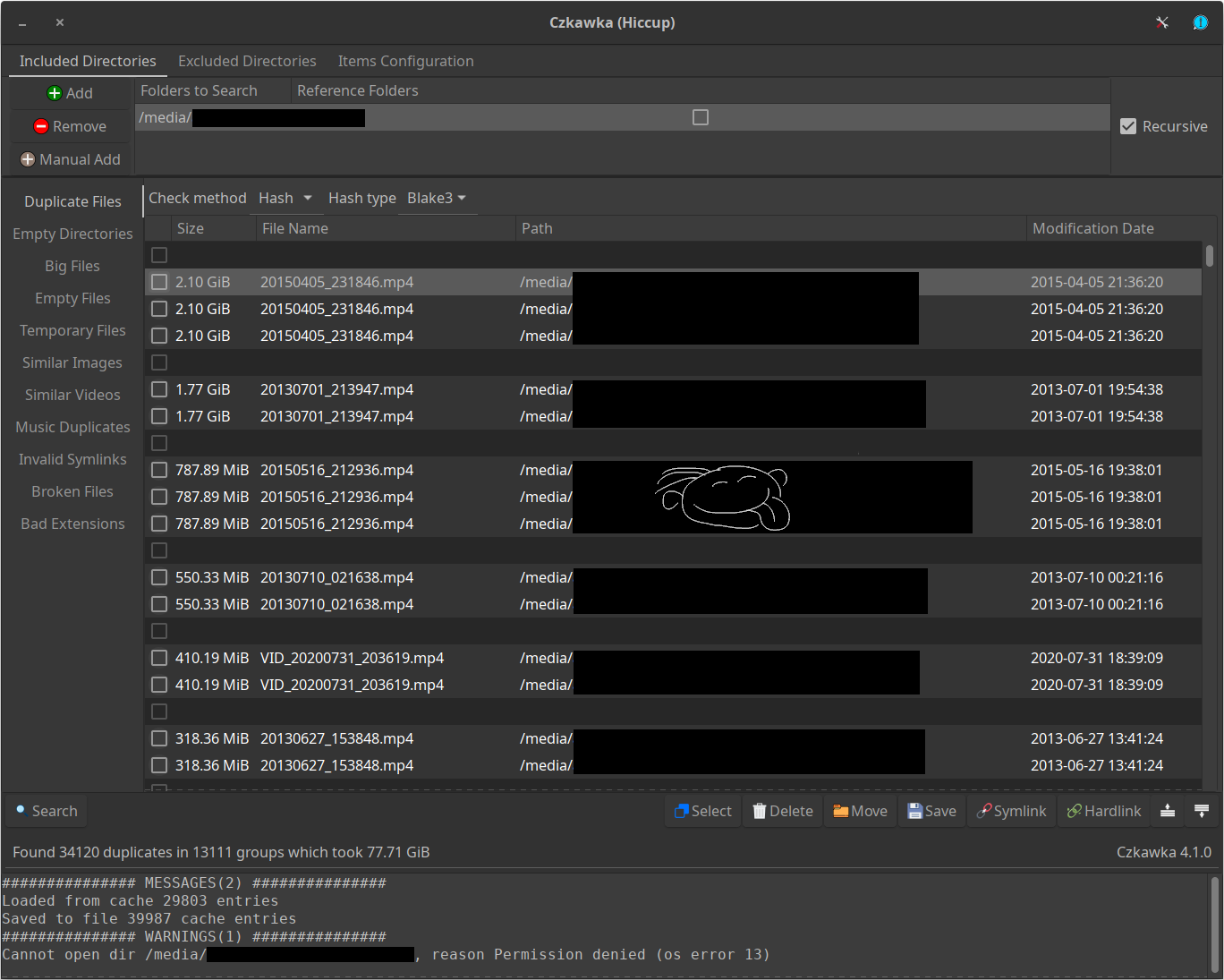

Czkawka

For some time now I’ve been using Czkawka (Polish for “hiccup”), created by Rafał Mikrut. It’s said to be a successor to the venerable FSLint, but written in Rust instead of Python and therefore, according to the benchmarks on the project’s GitHub page, much faster than alternative programs. It also sports many features its predecessor lacked, media comparison tools to name just one. At the time of writing, its interface still feels a bit unpolished (it lacks RMB context menus), but it’s a great and helpful tool.

If you’re a former FSLint user, you’ll feel right at home with Czkawka. If you’re not, here’s a very brief overview. The GUI is divided into five main parts. The upper section (1) contains an icon for accessing the program settings and several tabs for picking the paths Czkawka will operate on, excluded directories and excluded path patterns. A path can be marked as a Reference, which means that files residing in it will be treated as “originals”: the program won’t look for duplicates within that directory and will prevent you from deleting any of its files.

The second section (2) contains the collection of operations Czkawka can perform. Personally, I mostly use Duplicate Files and Empty Directories.

Czkawka’s main interface window.

The third section (3) is filled in once Czkawka finishes the selected operation: the list gets populated with search results and lets you pick items for review or deletion.

The fourth section (4) contains several buttons that, due to the lack of an RMB menu, you’ll be using frequently. The two most important are Select and Delete. More on them later.

The final section (5), at the bottom of the program’s window, displays the program’s operation log.

Dealing With Duplicates

I never do a full file system scan.

I limit it to only $HOME, /mnt and /media.

I trust my system and I know that package maintainers are not littering it with duplicates.

Besides, cleaning up anything outside those three locations would require running Czkawka as root, which would violate my rule.

My routine is to first search for actual file duplicates and then, once they’re cleaned up, scan for empty directories. I usually don’t scan for similar media files, as I don’t tend to leave transcoded media lying around. The time it takes to find duplicates depends on six major factors (in no particular order):

- single-core performance of your CPU (as far as I know, Czkawka isn’t capable of multithreading yet),

- the chosen hashing algorithm,

- the number of duplicate files in the scanned paths,

- data size,

- storage type and its performance (on SSDs calculating hashes is extraordinarily fast, on SATA HDDs not so much),

- storage connector type (USB 2.x, USB 3.x, SATA, eSATA, etc.).

Hash calculation is resource-heavy, but Czkawka shaves off some time with a couple of tricks. It starts by comparing the sizes of all files in order to reject those whose size is unique. After this, it calculates partial file hashes (prehashes) of files that have identical sizes. A partial hash is calculated from only a fraction of a file’s contents. If, for example, the hashes of the first 64 kB of two files differ, we can be sure that those files are different without having to read their full contents (the same input always produces the same hash, so different hashes always mean different data; collision resistance only matters when hashes match). Only then does the program calculate full hashes of the files that survived the prehashing stage.
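The same three-stage idea (sizes, then prehashes, then full hashes) can be sketched with shell tools. This is an illustration of the technique, not Czkawka’s code: it uses SHA-256 from coreutils instead of BLAKE3, prehashes the first 64 KiB, assumes GNU find, and doesn’t cope with file names containing tabs or newlines. The function name is hypothetical, and it only prints results.

```shell
# Three-stage duplicate finder: size -> 64 KiB prehash -> full hash.
# Usage: find_dupes DIR...   Prints "hash<TAB>path" for every file with a twin.
find_dupes() {
    work=$(mktemp -d) || return 1
    tab=$(printf '\t')

    # Stage 1: only sizes that occur more than once can hide duplicates.
    find "$@" -type f -printf '%s\t%p\n' > "$work/sizes"
    awk -F '\t' 'NR == FNR { n[$1]++; next } n[$1] > 1' \
        "$work/sizes" "$work/sizes" > "$work/stage1"

    # Stage 2: hash only the first 64 KiB; group by size + prehash.
    while IFS=$tab read -r size path; do
        pre=$(head -c 65536 "$path" | sha256sum | cut -d ' ' -f 1)
        printf '%s:%s\t%s\n' "$size" "$pre" "$path"
    done < "$work/stage1" > "$work/pre"
    awk -F '\t' 'NR == FNR { n[$1]++; next } n[$1] > 1 { print $2 }' \
        "$work/pre" "$work/pre" > "$work/stage2"

    # Stage 3: full-content hash of the survivors; equal hashes = duplicates.
    while IFS= read -r path; do
        full=$(sha256sum < "$path" | cut -d ' ' -f 1)
        printf '%s\t%s\n' "$full" "$path"
    done < "$work/stage2" > "$work/full"
    awk -F '\t' 'NR == FNR { n[$1]++; next } n[$1] > 1' \
        "$work/full" "$work/full" | sort

    rm -rf "$work"
}
```

Each stage works only on the survivors of the previous one, so most unique files are rejected after a cheap size check and never read at all.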

So, I start by adding the paths I want to search for duplicates. Optionally, I mark one or more of them as Reference. I don’t change the hashing algorithm and always use Blake3. Then I select Duplicate Files from the operations list and hit the Search button. I suggest staying by the computer at least until the calculation of full hashes starts, as at that point the progress bar lets you judge how long the process will take. Then you can decide to either grab a volume of “War and Peace” or go for a short stroll in the park.

When the program eventually finishes its operation, you’ll be presented with a list of file groups that Czkawka considers duplicates. The list is sorted by the amount of wasted disk space, starting with the group of duplicates that takes up the most space on your hard drive (or whatever storage medium you analyzed). Every file in a group has a checkbox on its left side; marking it tells the program to delete that file when you press the Delete button. If you accidentally mark all files in a group for deletion, Czkawka will warn you that proceeding will remove all copies of that file, which is usually bad unless you don’t care about them. So be careful.

Search results as received from the censorship department.

If I remember correctly, in FSLint right-clicking a file in a group brought up a context menu that let you select all files from that directory. This was a great time saver: if you suspected that a specific directory contained duplicates, you could mark all of them in a second. The current version of Czkawka, 4.1.0, still doesn’t have an RMB context menu, so to mark all files from a specific path for deletion, you need to click the Select button and choose Select Custom. This opens a modal window for selecting files, directories and paths using a pattern, which can be a glob (wildcard) or a regex.

The truth is, I could never figure out how Name and Path pattern selection works. Those fields seem to accept wildcards, but no matter how valid my glob patterns were, Czkawka never followed them and, as a result, never selected the files I intended. What does work, though, is Regex Path + Name. But be careful with it if you aren’t familiar with regular expressions, as you can unintentionally select files, or whole directory trees, that you didn’t intend to delete. Pattern selection saves a great deal of time, since it relieves you from marking checkboxes manually. If you know that all files from a specific path can be deleted, use patterns to your advantage.
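Before pasting a regular expression into Czkawka, it can be worth previewing what it matches against your real paths. A minimal sketch with a hypothetical function name, using grep -E; note that grep’s POSIX extended syntax may not match the regex flavor Czkawka uses exactly (for example, `\d` isn’t portable in ERE, so use `[0-9]`), so treat the preview as an approximation for simple patterns.

```shell
# Preview which files under DIR a regular expression would select.
# Usage: preview_regex DIR REGEX
#   e.g. preview_regex ~/projects '/old_renders/.*\.exr$'
preview_regex() {
    # find prints full paths, so the pattern is matched against path + name
    find "$1" -type f | grep -E -- "$2"
    # grep exits with 1 when nothing matched; that's not an error here
    return 0
}
```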

I usually work in chunks, selecting a couple of screens’ worth of duplicates that take up the most space and then blasting them into oblivion. Lather, rinse and repeat. Depending on how many duplicates there are in the paths you compare, manual deletion can take from one to several hours.

Instead of deleting duplicate files, you may also turn them into symbolic (soft) links or, if they reside on the same file system, hard links. I use hard links a lot in my projects path to reuse the same data (textures, geometry, etc.) in many projects without wasting additional disk space, while keeping projects encapsulated as stand-alone units that still work when pushed to other machines.
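Replacing a duplicate with a hard link by hand comes down to ln -f, but it’s worth checking first that both files really are identical and live on the same file system, since hard links can’t cross file systems. A minimal sketch with a hypothetical helper, using GNU stat’s %d (device number) and cmp from diffutils:

```shell
# Replace DUPLICATE with a hard link to ORIGINAL, after sanity checks.
# Usage: hardlink_duplicate ORIGINAL DUPLICATE
hardlink_duplicate() {
    orig=$1
    dup=$2
    # Hard links only work within one file system (same device number).
    if [ "$(stat -c %d -- "$orig")" != "$(stat -c %d -- "$dup")" ]; then
        echo "not on the same file system" >&2
        return 1
    fi
    # Byte-by-byte comparison; refuse if the contents differ.
    if ! cmp -s -- "$orig" "$dup"; then
        echo "files differ" >&2
        return 1
    fi
    # -f replaces DUPLICATE with a new link to ORIGINAL's inode.
    ln -f -- "$orig" "$dup"
}
```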

More often than not, I split the cleanup across multiple days, because I rarely have several hours of continuous free time that I’d like to spend on this kind of task. I like creating order out of chaos, whether physical or digital, but only up to a point. Thankfully, after each consecutive cleanup the program takes less and less time to calculate hashes, as the number of duplicates diminishes.

I don’t bother with small files, like those of 100 kB or less, especially if there are hundreds of them, unless, of course, I can easily select them with a regex pattern or I’m desperate for free disk space.

Cleaning Up Similar Media Files

The steps for this operation are similar to those of the duplicate finder. First you select the paths to analyze, then a resizing algorithm, hash size, hash type, similarity level and a couple of flags. All these settings are well documented in their tooltips, so read them if you want to know how they affect the comparison. The program’s defaults are optimal in most cases, and I’ve never needed to change them.

When you hit the Search button, the program will take some time to crunch the numbers and eventually present you with a list of images it considers similar. When you LMB-click an entry, Czkawka shows a preview in the rightmost pane. Selection and deletion work exactly as they did in the duplicate finder, so I won’t go into details here.

I find this function especially useful when collecting reference images from the Internet, as I usually end up with multiple similar images of the same thing in various dimensions or formats. One pass with the program is enough to find and delete them.

Please note that Czkawka versions prior to 5.0.0 do not support the WebP format.

Removing Empty Files And Directories

You can find and remove them using either Czkawka or system tools. Explaining how to do it with Czkawka is unnecessary: you just pick the operation and run it, as there are no settings for it.

If you feel like doing it with system tools, find has all you need.

The command below will print relative paths of all empty files in the current working directory:

find ./ -type f -empty

To print empty directories in the current working directory you would use:

find ./ -type d -empty

In order to get absolute paths, replace ./ with an absolute path.

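Now, to remove them automatically, you need to pass each result to the command responsible for removing directories and/or files. For example (-depth makes find handle a directory’s contents before the directory itself, so nested empty directories are removed in one pass, and -mindepth 1 keeps it from trying to remove the starting directory):

find ./ -depth -mindepth 1 -type d -empty -exec rmdir {} \;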
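Wrapped into a reusable helper with a dry-run mode, this could look like the sketch below (hypothetical function name, GNU find assumed). It uses -depth so that directories emptied during the run get removed too, and -mindepth 1 so the starting directory itself is left alone. It also lists empty files while skipping *.lock files, for the reasons given earlier; empty files are only printed, never deleted. Note that the dry run can only report directories that are empty right now, not parents that would become empty.

```shell
# Report empty files and remove empty directories under DIR.
# Usage: prune_empty DIR [--dry-run]
prune_empty() {
    dir=$1
    # Empty files are only listed; lock files are skipped on purpose.
    find "$dir" -type f -empty ! -name '*.lock' -printf 'empty file: %p\n'
    if [ "${2:-}" = "--dry-run" ]; then
        find "$dir" -depth -mindepth 1 -type d -empty -printf 'would remove: %p\n'
    else
        # -depth visits children first, so parents emptied this way go too.
        find "$dir" -depth -mindepth 1 -type d -empty -exec rmdir {} \;
    fi
}
```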

Fixing Symbolic Links

As far as I know, Czkawka can only remove invalid links, so I do this step manually, first listing all invalid links in a given location:

find ./ -xtype l

Then I either relink the ones I want to keep to new targets, or delete them if I don’t need them anymore. Relinking looks like this:

ln -sf target_path symlink_path
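To decide which links to fix and which to drop, it helps to see where each broken link was pointing. GNU find’s -printf can show that (%l is the link’s target). A minimal sketch with a hypothetical function name:

```shell
# List broken symlinks under DIR together with their (missing) targets.
# Usage: broken_links DIR
broken_links() {
    # -xtype l: the link's target doesn't resolve (GNU find)
    find "$1" -xtype l -printf '%p -> %l\n'
}
```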

Prevention

I think that completely preventing digital garbage from accumulating is impossible. You might be very disciplined in putting files in the appropriate directories, you might always remember to remove temporary files as soon as they’re no longer needed, you might perform regular cleanups at the end of each working day, but unless you’re autistic or have OCD, the trash will always show up. Always. The truth is that the only time we don’t generate junk files is when we’re not working.

Of course it is.

(Edited version of a photo originally taken by Kristin Dos Santos, CC BY-SA 2.0)

If you think that digital garbage piles up way too quickly on your computer, it may be worth investigating your directory structure. Even a slight change in its hierarchy might make it easier to maintain order. Try to name your files properly, but at the same time resist using long file names; take advantage of directory nesting instead. A file placed in an appropriately named nested directory can have a much shorter name and becomes easier to find and identify.

Use /tmp to your advantage.

It really works wonders.

Refrain from storing files and directories in your desktop path.

And if you must use it, treat it as a place for short-term storage only.

-

¹ Alternatively K4DirStat, or the text-only du. Windows users will resort to WinDirStat.